It's time for another in the series about Ila. This time we'll look at the var type and the Var[t] family of classes.

What is Ila? In January 1998, Uday Reddy presented Objects and Classes in Algol-Like Languages (refined in 2000). In the spring of 1998, I found myself Reddy's M.S. student, designing and implementing a call-by-value variant of the IA+ language presented there. I've taken to calling the implemented language Ila in this blog.

Second Class Objects

Let's look at another case of "everything is an object -- except when it's not." Pop quiz: how many objects do you see?

```
a = 1
```

If we take this to be from a language where "everything is an object", then there is at least one object: the integer `1`. But what about `a`? Some would say it's just a name, or a reference to the object `1`. But think about it this way: after this assignment, somewhere between the name `a` and the object `1` there is a container. It's a container you can't "get your hands on": one that can't be created anonymously on the fly or returned from functions. In short, it's a second-class citizen in the language, and furthermore, not an object.

Notice I didn't say this language (which could be any one of "most" languages today) doesn't let you create containers. In fact, the simplest of all useful classes could be a simple container:

```java
class Box
{
    Object contents;
    Object getContents() { return this.contents; }
    Object setContents(Object src) { return this.contents = src; }
}
```
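Java itself illustrates the point. A Java local variable is exactly the kind of second-class container described above: a method cannot receive it and write into it. The sketch below uses a generic variant of `Box` with a constructor added; `BoxDemo`, `resetLocal`, and `resetBox` are hypothetical names for illustration. It contrasts assigning to a parameter with updating a box the caller can still see.

```java
// A generic variant of the Box class above (hypothetical, for illustration).
class Box<T> {
    private T contents;

    Box(T initial) { this.contents = initial; }

    T getContents() { return contents; }

    T setContents(T src) { return this.contents = src; }
}

class BoxDemo {
    // Assigning to a parameter only rebinds the callee's copy; the caller's
    // local variable (a second-class container) is untouched.
    static void resetLocal(int n) { n = 0; }

    // Passing the container itself lets the callee update what the caller sees.
    static void resetBox(Box<Integer> b) { b.setContents(0); }

    public static void main(String[] args) {
        int local = 42;
        resetLocal(local);
        System.out.println(local);              // still 42

        Box<Integer> box = new Box<>(42);
        resetBox(box);
        System.out.println(box.getContents());  // now 0
    }
}
```

The first call changes nothing the caller can observe; the second works because the container is now a value that can be passed around.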

In order to be at all interesting, objects must have instance variables, some form of local state. So why not make variables themselves objects, instances of primitive classes?

References and Boxes

Before we look at what this even means and how the technique plays out in Ila, I want to make a note on related work. Explicit storage-cell types are certainly out there: `ref` types are part of what's biting Robot Monkey's shiny metal posterior, and there are others, like `box` in Scheme. The difference with this approach is that `var` is an object. (I know, tortured sighs from certain parties. But for some people this represents the leveraging power of orthogonality. OK. Groans from other corners. All right, let's move on.)

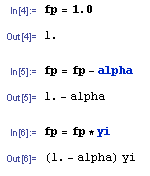

Var[t] - Variables as Objects

First, the formal introduction. In Reddy's IA+ paper, there was a `var t` polymorphic type and a family of classes `Var[t]` implementing it. The object signature for `var t` is `{get : unit -> t, set : proc t}`. So, given a variable object `v`, we retrieve its value with `v.get()` and assign to it with `v.set(k)`. That's the object syntax. As a built-in type, it can also get special syntactic treatment to provide the more usual forms.
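As a rough sketch, the `var t` signature maps onto a Java generic interface: `unit -> t` becomes a no-argument method, and `proc t` becomes a `void` method taking a `t`. The names `VarT`, `Var`, and `VarDemo` are hypothetical, and because Java erases generics, the `Var[t]` family collapses into a single generic class here.

```java
// Hypothetical Java rendering of the signature of var t:
//   {get : unit -> t, set : proc t}
interface VarT<T> {
    T get();            // unit -> t
    void set(T value);  // proc t: a procedure that returns no value
}

// Stands in for the whole Var[t] family as one generic cell.
class Var<T> implements VarT<T> {
    private T contents;  // null until the first set

    public T get() { return contents; }

    public void set(T value) { contents = value; }
}

class VarDemo {
    public static void main(String[] args) {
        VarT<Integer> v = new Var<>();
        v.set(7);                          // object syntax for assigning 7
        System.out.println(v.get() + 1);   // object syntax for reading; prints 8
    }
}
```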

How do you declare a variable? Well, it's an object, so you should really be asking how to instantiate a new variable; more specifically, how to get a new `Var[t]` object. (The `Var[t]` notation is generics syntax: you'd write `Var[int]` or `Var[NuclearSubmarine]` to talk about the classes for variables of specific types.) In Ila, the syntax for a new object has the form `new id isa class-expr`, where `id` is the object name, in scope to the end of the basic block, and `class-expr` is a class-typed expression. More about class-typed expressions another time; here we'll just write the names of classes.

Putting it all together, here's a snippet of code using variables:

```
new username isa Var[str];
readstr("Hello, what's your name?",username);
println("Pleased to meet you, "+username+".");
println "Would you like to play a game?";
new score isa Var[int];
score := 0;
```
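For comparison, here is roughly what the snippet means with every implicit call spelled out, as a hand-written Java sketch. `Var`, `SnippetDemo`, and this `readstr` are hypothetical stand-ins, not Ila's actual runtime, and the real `readstr` may differ in detail. The input source is a field only so that it can be swapped for a fixed string when testing.

```java
import java.util.Scanner;

// Hypothetical single-cell class standing in for the Var[t] family.
class Var<T> {
    private T contents;
    T get() { return contents; }
    void set(T value) { contents = value; }
}

class SnippetDemo {
    // Where readstr reads from; replaceable for testing.
    static Scanner input = new Scanner(System.in);

    // Hypothetical stand-in for Ila's built-in readstr: it receives the whole
    // container and stores the line it reads into it.
    static void readstr(String prompt, Var<String> target) {
        System.out.println(prompt);
        target.set(input.nextLine());
    }

    public static void main(String[] args) {
        Var<String> username = new Var<>();             // new username isa Var[str];
        readstr("Hello, what's your name?", username);  // container passed whole
        System.out.println("Pleased to meet you, " + username.get() + ".");  // implicit get
        System.out.println("Would you like to play a game?");
        Var<Integer> score = new Var<>();               // new score isa Var[int];
        score.set(0);                                    // score := 0;
    }
}
```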

Aside from the `new username isa Var[str]` syntax, which is a little obnoxious and could be reduced to something like `var username`, the variable usage is entirely ordinary. Here we see:

1. An implicit `.get()` call in the string expression `"Pleased to meet you, "+username+"."`.
2. An implicit `.set()` call in `score := 0`.
3. The whole container being passed, so that it can be updated, in `readstr("Hello, what's your name?",username)`.

To see how these work, we need to look at the types. In Ila, the type of an object is the type of its class signature, so `username` has the type `{get : unit -> str, set : proc str}`.

Let's look at these again in slow motion.

1. In `username+"."`, we have something added to a string literal. In Ila, `+` is overloaded, so there are only a few choices, and the string literal resolves this one to the string `+` operator. So the expression `username` is expected to be a string. But it is not a string; it's a variable containing a string. What makes this work is a subtype relation in the type system and a coercion at runtime. The type `var t` is a subtype of `t`, and at runtime, variables of type `var t` are coerced to values of type `t` by calling the `get` method.

   In other words, `username+"."` is implicitly turned into `username.get()+"."`.

2. Assignments like `score := 0` are simply syntactic sugar for `score.set(0)`. The only other thing worth noting here is that there's nothing vague or shadowy about what counts as an lvalue. The only things that can go on the left of an assignment are things of type `var t`, or more precisely, things of any type that provides a `set : proc t` method, as `var t` does. If you wrote some arbitrary class and gave it a `set` method, you could put its objects on the left of an assignment operator.

3. Passing values of type `var t` to functions and procedures is similar to the first case, but you will want to know the type of the function's or procedure's parameter. If it expects a plain `t`, the variable is coerced with `get`, just as in the first case. If the type it expects is `var t`, look out (as with the "bang" in Scheme's `set!`): it means the variable is being passed as a container object, and its contents could be modified. In the case of the built-in procedure `readstr`, this is expected.
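The three cases can be modeled in Java under one loud assumption: Java's subtyping is nominal rather than structural, so "anything with a `set` method" has to be spelled out as an interface. `Settable`, `Gettable`, `Clamped`, `assign`, `exclaim`, and `append` are all hypothetical names, and `assign` merely stands in for what an Ila `:=` desugars to.

```java
// Nominal stand-ins for the structural pieces of {get : unit -> t, set : proc t}.
interface Settable<T> { void set(T value); }
interface Gettable<T> { T get(); }

class Var<T> implements Gettable<T>, Settable<T> {
    private T contents;
    Var(T initial) { contents = initial; }
    public T get() { return contents; }
    public void set(T value) { contents = value; }
}

// An arbitrary class with a set method: not a variable, yet a valid
// assignment target under the desugaring  x := e  ==>  x.set(e).
class Clamped implements Settable<Integer> {
    int last;
    public void set(Integer value) { last = Math.max(0, Math.min(100, value)); }
}

class DesugarDemo {
    // Case 2: the target of := only needs a set method.
    static <T> void assign(Settable<T> target, T value) { target.set(value); }

    // Case 3, parameter of type t: the caller passes a value, coerced via get().
    static String exclaim(String s) { return s + "!"; }

    // Case 3, parameter of type var t: the callee gets the container and may modify it.
    static void append(Var<String> v, String suffix) { v.set(v.get() + suffix); }

    public static void main(String[] args) {
        Var<String> name = new Var<>("Ila");
        System.out.println(name.get() + ".");     // case 1: name+"." via get()
        Clamped c = new Clamped();
        assign(c, 250);                            // c := 250
        System.out.println(c.last);               // clamped to 100
        System.out.println(exclaim(name.get()));  // value passed
        append(name, "+");                         // container passed
        System.out.println(name.get());           // Ila+
    }
}
```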

Conclusion: Translation to Java

The Ila implementation needed to represent the `Var[t]` class in Java in a way consistent with the rest of the language. It was not possible to use Java local variables directly, except as an optimization when a variable's use matched the semantics of Java local variables. For the general translation, I simply had to make an `iap.lang.Var` container class, basically like the `Box` class mentioned earlier. This is probably where the idea breaks down, and I'm sure some readers have already thought of this point: who wants to pay a method dispatch cost for every variable access?
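A sketch of the two translation strategies, assuming a container class shaped like the one described; the `Var` below is a hypothetical stand-in, not the actual `iap.lang.Var` source, and `TranslationDemo` is likewise illustrative. The general form routes every read and write through a method call; the optimized form applies only when the variable is never passed as a container.

```java
// Hypothetical stand-in for a runtime container class such as iap.lang.Var.
class Var<T> {
    private T contents;
    Var(T initial) { contents = initial; }
    T get() { return contents; }
    void set(T value) { contents = value; }
}

class TranslationDemo {
    // General translation: every read is get(), every write is set(),
    // each a method call (plus boxing, since Java generics need Integer).
    static int sumGeneral(int n) {
        Var<Integer> total = new Var<>(0);
        for (int i = 1; i <= n; i++) {
            total.set(total.get() + i);
        }
        return total.get();
    }

    // Optimized translation: valid only when the variable never escapes
    // (is never passed as a container), so a Java local has the same semantics.
    static int sumOptimized(int n) {
        int total = 0;
        for (int i = 1; i <= n; i++) {
            total += i;
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumGeneral(10) + " " + sumOptimized(10));  // 55 55
    }
}
```

Once a variable is handed to something like `readstr`, the escape condition fails and the container has to exist.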

If you tend to have a lot of getters and setters in your classes, or if you're attaching listener management methods to much of an object's state, consider that a variable object could be one place to hang all that functionality, as methods of the variable itself instead of methods of its containing class. Try `obj.field.addListener` and `obj.field.set` on for size instead of `obj.addFieldListener` and `obj.setField`. To the degree that "everything is an object", that universal orthogonality, is useful, and to the degree that it is useful for metaprogramming, this technique deserves some consideration.
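A minimal sketch of the pattern, with hypothetical names (`ObservableVar`, `Thermostat`, `target`, `ListenerDemo`): the variable object owns its listener list, and the containing class simply exposes the variable. This illustrates the idea in plain Java; it is not part of Ila.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// A variable object that carries its own listener management.
class ObservableVar<T> {
    private T contents;
    private final List<Consumer<T>> listeners = new ArrayList<>();

    ObservableVar(T initial) { contents = initial; }

    T get() { return contents; }

    void set(T value) {
        contents = value;
        for (Consumer<T> l : listeners) l.accept(value);  // notify after the update
    }

    void addListener(Consumer<T> listener) { listeners.add(listener); }
}

// The containing class needs no addTargetListener/setTarget pair.
class Thermostat {
    final ObservableVar<Integer> target = new ObservableVar<>(20);
}

class ListenerDemo {
    public static void main(String[] args) {
        Thermostat obj = new Thermostat();
        obj.target.addListener(t -> System.out.println("target is now " + t));
        obj.target.set(22);  // instead of obj.setTarget(22)
    }
}
```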