In "Dependent Iteration" we looked at a small numeric program given in imperative programming style and wondered how it might look in functional or hybrid environments, and whether it would perform well. I felt the RC filter algorithm posed some challenges for being elegantly expressed in a functional style, in a way that might be appealing to procedural programmers but that also is fast. In "RC Filter with Python and Java" we got some real numbers for an input file of about 1.2 million entries. What I really wanted to try was a compiled functional language, like OCaml. So, now we can add OCaml, procedural and functional variants, to our previous results (note Psyco-enhanced Python was added, see post comments):

| Language | Total | Time/Iteration |

| Java | 29 ms | 24 ns/iteration |

| OCaml native procedural | 56 ms | 47 ns/iteration |

| OCaml bytecode procedural | 547 ms | 456 ns/iteration |

| OCaml native functional | 940 ms | 783 ns/iteration |

| Python with Psyco | 588 ms | 490 ns/iteration |

| Python | 1507 ms | 1255 ns/iteration |

| OCaml bytecode functional | 1692 ms | 1410 ns/iteration |

I was surprised to see OCaml not beat Java. I am using the OCaml compiled for the Microsoft toolchain, for which there is a release note about degraded performance from lack of computed gotos; maybe that's it. It was interesting to see the bytecode times so similar to the Psyco-enhanced Python version. The functional version is just a bit disappointing, partly because I had to reverse the list.

Let's take a look at the code, and some of the practical things I learned about getting started with OCaml.

Fractal Benchmark Page

My favorite resource for this post is this

Fractal Benchmark page, from which I gleaned two clues about OCaml:

1. Fine-grain timing (under a second) can be done with

Unix.gettimeofday().

2. OCaml has

for loops:

for i = 1 to 10 do

print_int i

done

Using OCaml Modules

If you bother to read the documentation ahead of time, you won't get caught here. But silly me, given the preceding benchmark code and the fact that the

Unix module is in the OCaml library documentation, I assumed

Unix.gettimeofday() would work out of the box.

No, if you want to use the

Unix module, and you're running the ocaml interpreter, you need to first use the

#load (that's "pound load", one word, not the "#" prompt followed by a load command) directive:

#load "unix.cma";;

You have to know to use the lower-case name "unix" to load a module with the capitalized name "Unix", and you have to know the special file extension ".cma". Did I mention that you need to do this with some modules, but not others which come pre-loaded, and I'm not sure I can tell you which ones are like this.

I didn't really find this the most user-friendly introduction to getting something done, you can draw your own conclusions, but the cherry on top was the next point.

If you want to compile source code, you must

not have a

#load directive in the source code. Presumably one can load source code into the interpreter, as you can with SML/NJ's

use command, and if so, does that mean you need to manually type the

#load(s) first? This seems incomprehensible. Obviously not a showstopper issue to the OCaml community, one way or other.

What you

do need to do if compiling with a module like Unix is to mention its compiled module file to the compiler. I needed to list the module before my source file. Here's my bytecode compile command:

ocamlc -o lp.exe unix.cma lp.ml

Using the Native Compiler

I had installed OCaml for the Microsoft toolchain. I needed to do just a couple setup things:

1. Make sure the Visual C bin directory is on the path:

set PATH=C:\Program Files\Microsoft Visual Studio 8\VC\bin;%PATH

2. Make sure the

LIB environment variable has a path of the Microsoft library directories:

set LIB=C:\Program Files\Microsoft Visual Studio 8\VC\lib;C:\Program Files\Microsoft Visual Studio 8\VC\PlatformSDK\Lib

If you don't have #1 right, you get a poignantly confusing error message:

'ml' is not recognized as an internal or external command,

operable program or batch file.

Assembler error, input left in file C:\DOCUME~1\jfkbits\LOCALS~1\Temp\camlasm705c28.asm

The "ml" mentioned here is the Microsoft Assembler, not any ML language thing.

The compile command for the native compiler is like that for the bytecode compiler, only you change

.cma files to

.cmxa:

ocamlopt -o lpopt.exe unix.cmxa lp.ml

Procedural Code

Without further ado, the procedural code:

let lowpassProcedural x dt rc =

let n = Array.length x in

let y = Array.make n 0.0 in

let alpha = dt /. (rc +. dt) in

begin

Array.set y 0 (Array.get x 0);

for i = 1 to n-1 do

Array.set y i (alpha *. (Array.get x i) +. ((1.0 -. alpha) *. (Array.get y (i-1))));

done;

y

end;;

This is about as close as it gets to the universal procedural implementation: arrays and for/do loops. Notice that overloading is not to be found in OCaml, so the floating-point operators have identifiers like

+. and

*..

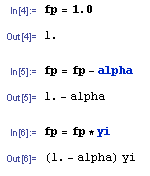

Functional Code with Fold

The correct code:

let lowpassFunctionalFold x dt rc =

let x0 = List.hd x in

let alpha = dt /. (rc +. dt) in

let helper acc xi =

match acc with (yprev,ys) ->

let yi = alpha *. xi +. ((1.0 -. alpha) *. yprev) in

(* (yi,ys @ [yi]) don't do this *)

(yi, yi::ys)

in

match List.fold_left helper (x0,[]) x with

(_,acc) -> List.rev acc (* rev accounts for about 40% of the running time *)

;;

Turn your attention to the last line of

helper where the returned list is constructed. The given implementation uses

:: (cons) and the result is reversed at the end. The reverse is done to give the proper ordering of the output list when the list elements are visited first to last as with

fold_left. Note that we must visit first to last, because the RC filter algorithm is specified that way. (Even if it works to filter a signal with time reversed, I'm looking for code that can be explained to those familiar with the subject.) This means we can't use

fold_right.

As noted in the source, I originally wrote this using

@ (list append) to tack elements onto the end of the list. But this is exactly what the documentation is warning about when it says "Not tail-recursive." I gave up timing that implementation. OCaml could use

snoc here (append to the end of a list, counter to cons which prepends).

There may be a better way to do this in OCaml using fold or similar list iterator. I was simply trying to get a reasonably compact functional definition that didn't degenerate into hand-coded recursion.

Updated: Added native compilation numbers and commentary.